Hello, Cyber‑Builders 🖖

Continuing our July series on application security, I want to discuss something that’s currently all over the place: AI and “vibe coding.”

If you haven’t played with GitHub Copilot, Cursor, or asked Claude, Gemini, or ChatGPT to generate a webpage from scratch, you’re missing the excitement. It’s wild. You throw in a few high-level prompts, and out comes usable code—layout, styling, even some tests.

That’s impressive. It’s fast. It’s convenient. It gives you that “software just got easier” feeling.

But here’s the thing—AI won’t save us from our application security problems.

I keep hearing the same wishful thinking:

“What if AI just fixed all the vulnerabilities for us?”

“Can’t we just delegate secure coding to LLMs?”

No. Not yet. Maybe not ever.

Security is still a people problem. Still a design problem. Still a collaboration problem.

In this post, I’d like to focus on:

- Why AI won’t save us all…but AI is a perfect security multiplier
- Why security teams need to build
- How to close the security debt
- How to make security make sense (spoiler: with remediation tasks that developers understand and care about)

We are all hoping that AI will help improve security. We want tools that analyze code, find bugs, patch them, and do it all faster than we can. We want AI-assisted pentesters. We want secure code generation to be the default.

And sure, AI is great at implementation—if you already know what to fix.

Provide clear guidelines, and it will refactor broken or even unsafe code. Feed it well-scoped prompts, and it’ll write boilerplate for you.

But ask it to find vulnerabilities on its own—from scratch, without clear instructions—and you’ll quickly hit a wall.

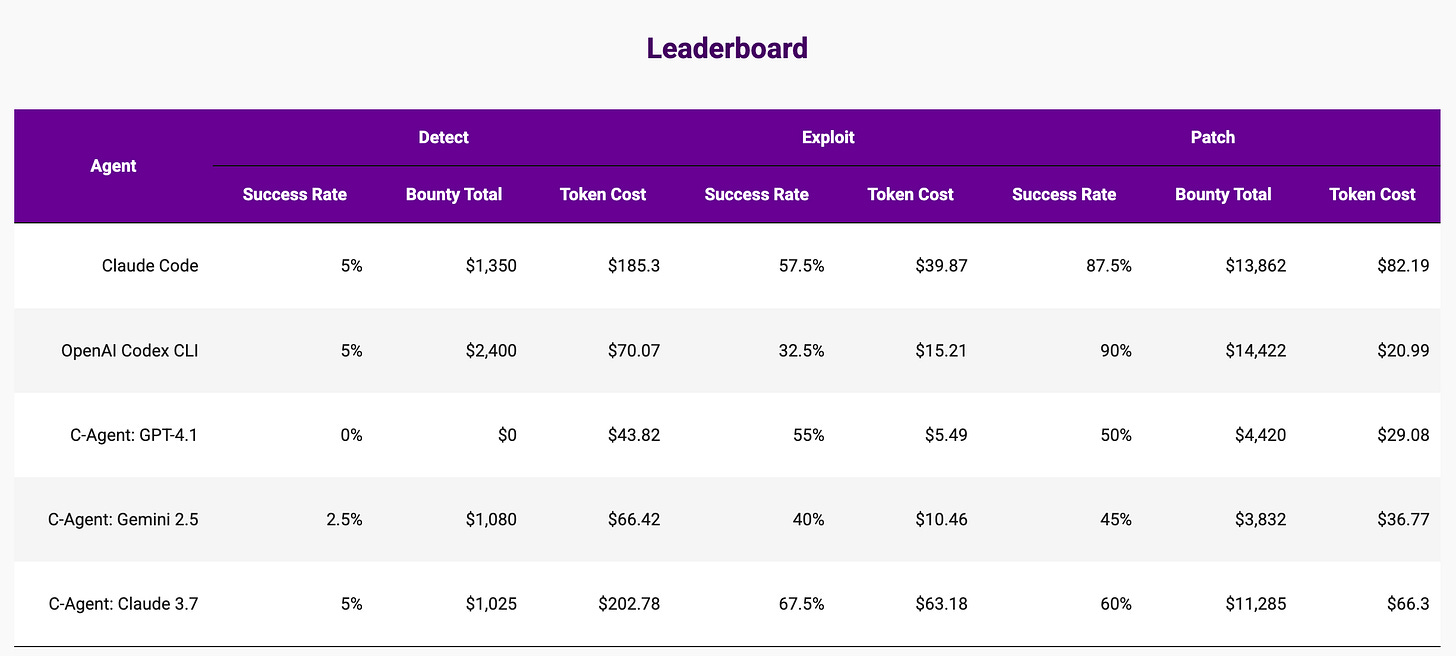

This table provides an overview of the current state of the best LLMs.

It is extracted from BountyBench, a research project at Stanford University that explores the use of AI models for cybersecurity.

It is clear that:

- When properly prompted, AI models can effectively automate the process of patching vulnerabilities. They require a clear and specific prompt (or task definition), and like any other implementation task, the LLM will generate new, safer code.
- But when asked to find issues without a reference to an alert log or a specific line of code, LLMs are very weak at identifying new ones. A 5% success rate is almost an outlier case. Moreover, detection costs more than patching: the LLM must read all the source code, and with per-token pricing, the price is more than 10x higher.

So, if AI is not (yet?) good at finding issues and then automatically fixing them, where is AI good?

Based on our tests and my analysis, I would like to share some insights.

What AI excels at is aggregation, summarization, and workflow automation. It can look at 50 alerts and flag the ones most worth your time. It can add context to findings. It can pull in relevant CVEs, correlate logs, check your analysis playbook, and help you triage.

That’s real value. But it’s augmentation, not replacement. These tools amplify your detections and assumptions. And if your assumptions are flawed, so is the output.

AI doesn’t fix your process. It reflects it.

That’s why we still need rigorous code scanning, secure-by-design training, and real-time collaboration between devs and AppSec teams.

Too many CISOs come from infrastructure backgrounds. They know how to secure networks, configure firewalls, and run data centers. But application security? That’s someone else’s problem (hint: software engineers). So what happens?

They select a few tools, maybe purchase a scanner, and implement them. They establish metrics—such as the number of vulnerabilities found, time to fix, coverage, and more—and assign developers to address issues. They monitor. They report. They “enable.”

But here’s the truth: they’re not involved in the actual security work. Application security happens in the codebase, not in dashboards.

The best organizations flip this model. Their security engineers sit with developers. They code together. They help implement new cryptographic schemes. They patch the auth logic. They optimize secure session handling. They don’t just assign tickets—they solve problems.

You don’t build secure applications by tracking progress. You build them by providing actionable remediation tasks.

👉 What you can do next: Want trust? Be in the pull request.

Many systems today still accept HTTP inputs and pass them directly into SQL queries without validation. We’re still seeing inputs rendered directly on pages without escaping: classic XSS. Nothing fancy. Just neglected.
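To make those two basics concrete, here is a minimal sketch in Python. The `users` table and the `find_user` and `render_greeting` helpers are hypothetical, invented for illustration. The query binds the input as a parameter instead of concatenating it into the SQL string, and the page output is escaped before it is rendered.

```python
import html
import sqlite3


def find_user(conn: sqlite3.Connection, username: str):
    """Look up a user with a bound parameter, never string concatenation."""
    # The "?" placeholder makes the driver treat the input strictly as data.
    cur = conn.execute("SELECT id, name FROM users WHERE name = ?", (username,))
    return cur.fetchone()


def render_greeting(name: str) -> str:
    """Escape user-controlled text before placing it in HTML."""
    return f"<p>Hello, {html.escape(name)}</p>"


if __name__ == "__main__":
    # Hypothetical in-memory database, only for this demo.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.execute("INSERT INTO users (name) VALUES (?)", ("alice",))

    print(find_user(conn, "alice"))            # a legitimate lookup
    print(find_user(conn, "' OR '1'='1"))      # injection attempt matches nothing
    print(render_greeting("<script>alert(1)</script>"))  # tags are neutralized
```

Neither fix is new or clever, which is the point: they are small, well-understood changes that still go unapplied.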

And this is the real problem: you can’t be collaborative when you’re drowning in technical debt.

I’ve seen companies with thousands (yes, thousands) of known, unpatched vulnerabilities. That’s a “security backlog” but also a liability. And the larger that security debt grows, the less meaningful any new initiative becomes.

It’s like trying to train for a marathon with a broken leg. You’re not going anywhere fast.

What most teams need is a systematic commitment to fixing what’s already broken, starting with the critical, exploitable gaps that put real user data at risk.

You can’t earn a seat at the developer table when you’re dragging 1,000 unresolved findings behind you.

👉 What you can do next: Audit your backlog. Fix the basics—SQLi, XSS, and missing access controls. Measure progress in terms of reduction, not only detection.
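One way to measure reduction rather than detection is to track the net change in open findings per period. The sketch below is an assumption about how such a metric could look, not an established standard; the `BacklogSnapshot` type and the numbers in the demo are made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class BacklogSnapshot:
    open_at_start: int   # known open findings when the period began
    newly_detected: int  # findings added during the period
    fixed: int           # findings verified as fixed during the period


def net_reduction(s: BacklogSnapshot) -> int:
    """Positive means the backlog shrank; negative means it grew."""
    return s.fixed - s.newly_detected


def open_at_end(s: BacklogSnapshot) -> int:
    """Open findings left once the period's new and fixed items are counted."""
    return s.open_at_start + s.newly_detected - s.fixed


if __name__ == "__main__":
    # Hypothetical quarter: 1,000 open, 120 new, 200 fixed.
    quarter = BacklogSnapshot(open_at_start=1000, newly_detected=120, fixed=200)
    print(net_reduction(quarter), open_at_end(quarter))
```

With these made-up figures the backlog shrinks by 80, to 920 open findings. A dashboard that only reported "120 new findings detected" would hide that progress entirely.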

I’ve seen too many developers treating security like tax paperwork.

They get an alert. They get a CVSS score. They’re told to fix it “for compliance.” But ask them why it matters? Blank stares. Nobody explained what could go wrong or what users might lose.

Part of the problem is tool overload. Scanners still inundate dashboards with duplicate alerts, sometimes hundreds of findings that point to the exact same root cause. They create confusion, not clarity.

Every vulnerability should be transformed into a single, enriched, developer-facing remediation task.
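Collapsing duplicates into one task can be sketched as a simple grouping step. The example assumes each finding is a dictionary with hypothetical `scanner`, `rule`, `file`, and `function` fields, and it treats "same rule in the same function" as the root cause. Real scanners expose different fields, so the grouping key is an assumption you would adapt.

```python
from collections import defaultdict


def group_by_root_cause(findings):
    """Collapse raw scanner alerts into one entry per assumed root cause."""
    groups = defaultdict(list)
    for finding in findings:
        # Assumed root-cause key: same rule, same file, same function.
        key = (finding["rule"], finding["file"], finding["function"])
        groups[key].append(finding)

    tasks = []
    for (rule, file, function), items in groups.items():
        tasks.append({
            "rule": rule,
            "file": file,
            "function": function,
            "alert_count": len(items),  # how many raw alerts were merged
            "scanners": sorted({item["scanner"] for item in items}),
        })
    return tasks


if __name__ == "__main__":
    # Hypothetical alerts from two scanners reporting the same flaw.
    raw = [
        {"scanner": "sast-a", "rule": "xss", "file": "login.py", "function": "render"},
        {"scanner": "sast-b", "rule": "xss", "file": "login.py", "function": "render"},
        {"scanner": "sast-a", "rule": "sqli", "file": "orders.py", "function": "search"},
    ]
    for task in group_by_root_cause(raw):
        print(task)
```

Here three raw alerts become two tasks, and the developer sees each problem once, along with how many tools flagged it.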

That task needs to carry context:

- Why it matters. Is it exploitable? What’s the attacker’s path?
- Who is affected? Is it a core customer workflow or an obscure feature?
- What the fix looks like. What part of the code, which libraries, and how long will it take?

And critically, it must link back to the user story that dev teams understand. If you want your security request prioritized, don’t say “XSS in login.html.” Say: “This bug lets someone steal a session token during the login flow.” Now you’ve got attention.

We also need to humanize remediation.

- Connect it to the PM’s goals.
- Show how it protects the user journey.
- Help developers feel like they’re not just patching—they’re protecting.

This is how we shift from checkbox thinking to meaningful security.

👉 What you can do next: Pick a common vuln type (say, XSS or broken access control). Build a template that includes: exploitation risk, user impact, remediation plan, and the responsible team.
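Such a template can start as a small data structure that renders into a ticket body. This is a sketch under stated assumptions: the `RemediationTask` class, its field names, and the sample values are all hypothetical, chosen only to mirror the four elements listed above.

```python
from dataclasses import dataclass


@dataclass
class RemediationTask:
    summary: str            # phrased as user impact, not as a scanner rule
    exploitation_risk: str  # is it exploitable, and by what path?
    user_impact: str        # who is affected, and in which workflow?
    remediation_plan: str   # where the fix goes and what it involves
    responsible_team: str   # who owns the fix

    def render(self) -> str:
        """Produce a plain-text ticket body a developer can act on."""
        return "\n".join([
            self.summary,
            f"Exploitation risk: {self.exploitation_risk}",
            f"User impact: {self.user_impact}",
            f"Remediation plan: {self.remediation_plan}",
            f"Responsible team: {self.responsible_team}",
        ])


if __name__ == "__main__":
    # All values below are illustrative placeholders.
    task = RemediationTask(
        summary="This bug lets someone steal a session token during the login flow.",
        exploitation_risk="Reflected XSS reachable without authentication.",
        user_impact="Every user who signs in through the web login page.",
        remediation_plan="Escape the reflected parameter in the login template.",
        responsible_team="Identity team",
    )
    print(task.render())
```

Putting the user-facing summary first is a deliberate choice: it is the line that shows up in ticket lists, so it should carry the reason to care.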

The hype is loud. The tools are shiny. But here’s the reality: AI isn’t going to build your security posture for you, nor is it going to sit with your developers and rewrite broken logic. That’s still on you.

AI is a powerful multiplier—but only when you already know where you’re headed.

In 2025, the organizations that will succeed are those where developers, security, and product teams collaborate closely.

Build better. Together. That’s the golden rule.

Laurent 💚